Running cloud services on your local machine is often a problem because there is no local version available. Thankfully, AWS provides a local version of DynamoDB, which makes unit testing code that relies on DynamoDB a lot easier. Unfortunately, setting up DynamoDB Local and combining it with Testcontainers and Bitbucket Pipelines in your automated tests can cause some headaches. This blog post explains all required steps in Java and helps you avoid typical pitfalls.

All the code is available in a Bitbucket repository with various examples of how to combine the approaches below. Just go to https://bitbucket.org/sebastianhesse/java-dynamodb-local-automated-testing and have a look 😊 This blog post is divided into the following parts:

- Setting up DynamoDB Local

- Automatically Start DynamoDB Local Before Running the Tests

- Optional: Integrating Spring Framework

- Executing the Tests on Bitbucket Pipelines

Setting up DynamoDB Local

First, you need to set up DynamoDB Local. It’s a local version of the popular AWS database that you can install on your machine. You can choose between a “real” local installation, a Maven dependency or a Docker image. All options start a local instance of DynamoDB that you can connect to using the AWS SDK. The following sections use the DynamoDB Local Docker image. Running it locally only requires this command:

```shell
docker run -p 8000:8000 amazon/dynamodb-local
```

It spins up a Docker container and makes DynamoDB available on port 8000. Then, you only need to adapt the DynamoDB client from the AWS SDK and point it to the localhost endpoint:

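```java
import com.amazonaws.client.builder.AwsClientBuilder;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDB;
import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClientBuilder;

// Point the client to DynamoDB Local; any region works as the signing region
AwsClientBuilder.EndpointConfiguration endpointConfig =
        new AwsClientBuilder.EndpointConfiguration("http://localhost:8000", "us-west-2");

AmazonDynamoDB dynamodb = AmazonDynamoDBClientBuilder.standard()
        .withEndpointConfiguration(endpointConfig)
        .build();
```

If you want, you can further customize DynamoDB Local as described in the usage notes. For example, you can change the port.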
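When you start the container manually with `docker run`, your tests may try to connect before DynamoDB Local is ready. Here is a minimal sketch of a hypothetical helper class `DynamoDbLocalProbe` (not part of the original repository) that polls the endpoint with Java 11’s built-in `HttpClient`. It assumes that any HTTP response, even an error status, means the server is up, while a refused, reset or timed-out connection means it isn’t ready yet.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.time.Duration;

/** Hypothetical helper: waits until an endpoint such as DynamoDB Local answers HTTP requests. */
final class DynamoDbLocalProbe {

    private DynamoDbLocalProbe() {
    }

    /**
     * Polls the endpoint until it returns any HTTP response or the timeout expires.
     * Even an error status proves that the server is up and answering.
     */
    static boolean waitUntilReachable(String endpoint, Duration timeout) throws InterruptedException {
        HttpClient client = HttpClient.newBuilder()
                .version(HttpClient.Version.HTTP_1_1)
                .connectTimeout(Duration.ofSeconds(1))
                .build();
        HttpRequest request = HttpRequest.newBuilder(URI.create(endpoint))
                .timeout(Duration.ofSeconds(1))
                .GET()
                .build();
        long deadline = System.nanoTime() + timeout.toNanos();
        while (System.nanoTime() < deadline) {
            try {
                client.send(request, HttpResponse.BodyHandlers.discarding());
                return true; // the server answered, regardless of the status code
            } catch (IOException e) {
                Thread.sleep(200); // connection refused, reset or timed out: retry shortly
            }
        }
        return false;
    }

    public static void main(String[] args) throws InterruptedException {
        // Assumes DynamoDB Local was started with: docker run -p 8000:8000 amazon/dynamodb-local
        boolean reachable = waitUntilReachable("http://localhost:8000", Duration.ofSeconds(30));
        System.out.println(reachable ? "DynamoDB Local is reachable" : "DynamoDB Local did not respond in time");
    }
}
```

You could call it once, e.g. in a JUnit `@BeforeClass` method, before creating the DynamoDB client. With Testcontainers this is usually unnecessary, because Testcontainers by default waits until the exposed ports are listening.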

Automatically Start DynamoDB Local Before Running the Tests

Since you don’t want to start a Docker container manually each time you run a unit test, you need to automate this step. Testcontainers is a really good library for this case that integrates with your automated tests. It lets you start Docker containers, e.g. before running a JUnit test. To start a DynamoDB Local instance, simply add the following code to your tests:

```java
import org.junit.ClassRule;
import org.testcontainers.containers.GenericContainer;

// This declaration will start a DynamoDB Local Docker container before the unit tests run ->
// make sure to specify the exposed port as 8000, otherwise the port
// mapping will be wrong -> see the docs: https://hub.docker.com/r/amazon/dynamodb-local
@ClassRule
public static GenericContainer dynamoDBLocal =
        new GenericContainer("amazon/dynamodb-local:1.11.477")
                .withExposedPorts(8000);
```

This code uses JUnit 4’s @ClassRule annotation to trigger the Testcontainers start process. Testcontainers automatically starts the given Docker container and maps container port 8000 (DynamoDB Local’s default port) to a free port on your system. Since that host port can be different each time you run this code, you need to retrieve it. The following code builds the endpoint URL for accessing the local DynamoDB instance:

```java
private String buildEndpointUrl() {
    return "http://localhost:" + dynamoDBLocal.getFirstMappedPort();
}
```

Then use the endpoint URL to adapt the AWS SDK configuration as described above and run your tests. You can find a fully working example of this test in the DynamoDBLocalTest.java file in my Bitbucket repository.

Optional: Integrating Spring Framework

In the Java world, a lot of people use the Spring Framework, not only for managing dependencies but also for many other things that reduce boilerplate code. If you use Spring, you need to test your Spring-related code as well. However, when combining Spring with DynamoDB Local and Testcontainers, each test class needs to start with a clean database. To save you some time, here’s the trick for doing it:

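```java
import org.junit.ClassRule;
import org.junit.runner.RunWith;
import org.springframework.test.annotation.DirtiesContext;
import org.springframework.test.context.junit4.SpringJUnit4ClassRunner;
import org.testcontainers.containers.GenericContainer;

@RunWith(SpringJUnit4ClassRunner.class)
@DirtiesContext(classMode = DirtiesContext.ClassMode.BEFORE_CLASS)
public class YourTestClass {

    // specify DynamoDB Local using Testcontainers
    @ClassRule
    public static GenericContainer dynamoDBLocal =
            new GenericContainer("amazon/dynamodb-local:1.11.477")
                    .withExposedPorts(8000);

    // Your test code follows here ...
}
```

The Spring Test module offers a @DirtiesContext annotation that marks a Spring context as dirty. A dirty context is removed from Spring’s test context cache, so Spring builds a fresh context for this test class instead of reusing one from a previous class. Since the @ClassRule starts a new DynamoDB Local container for each test class, the recreated Spring beans then work with a fresh, empty database. You can mark the context as dirty before or after a test class or individual test methods. Just have a look at the JavaDocs of @DirtiesContext for further information.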

This is really helpful when dealing with Spring in your tests. If you have other ideas on how to solve this, please leave a comment below 💬


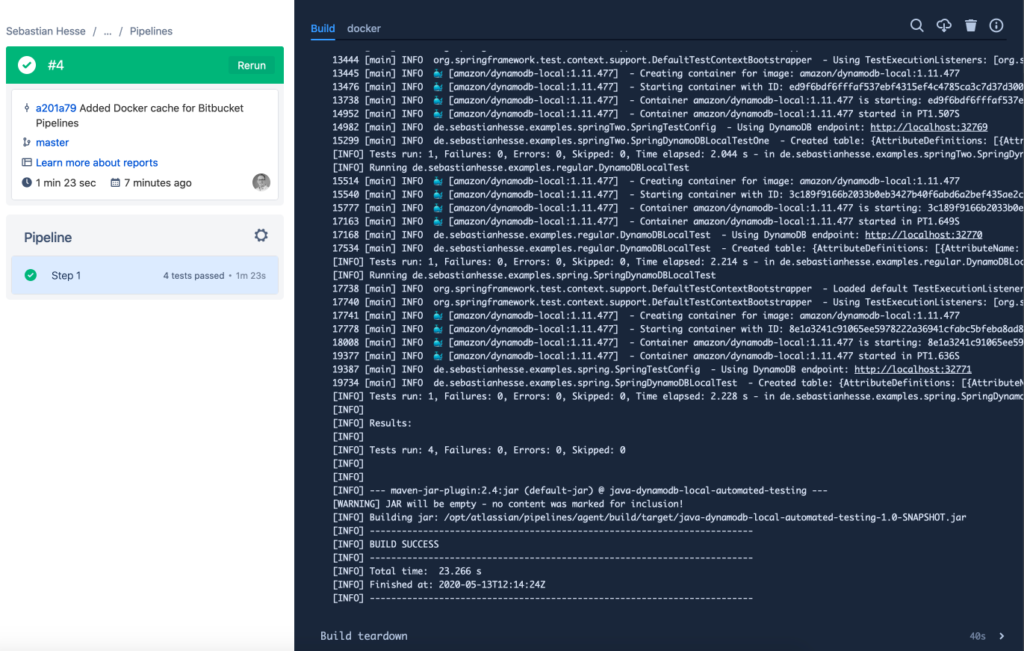

Executing the Tests on Bitbucket Pipelines

Now we’re getting to the most interesting part: putting everything together and running it on Bitbucket Pipelines. Before we do that, we need to configure Bitbucket Pipelines using a file called bitbucket-pipelines.yml:

```yaml
# You can change the image but make sure it supports Docker, Java and Maven.
image: k15t/bitbucket-pipeline-build-java:2020-01-09

pipelines:
  default:
    - step:
        caches:
          - maven
          - docker
        script:
          # this is the Maven command to be executed
          - mvn -B verify

options:
  docker: true
```

The configuration above defines a default pipeline that runs on every push. It uses the Maven and Docker caches, so every run except the first one takes less time. One important option is docker: true, which enables Docker and lets you run Docker commands within Bitbucket Pipelines. Testcontainers needs this to start a Docker container.

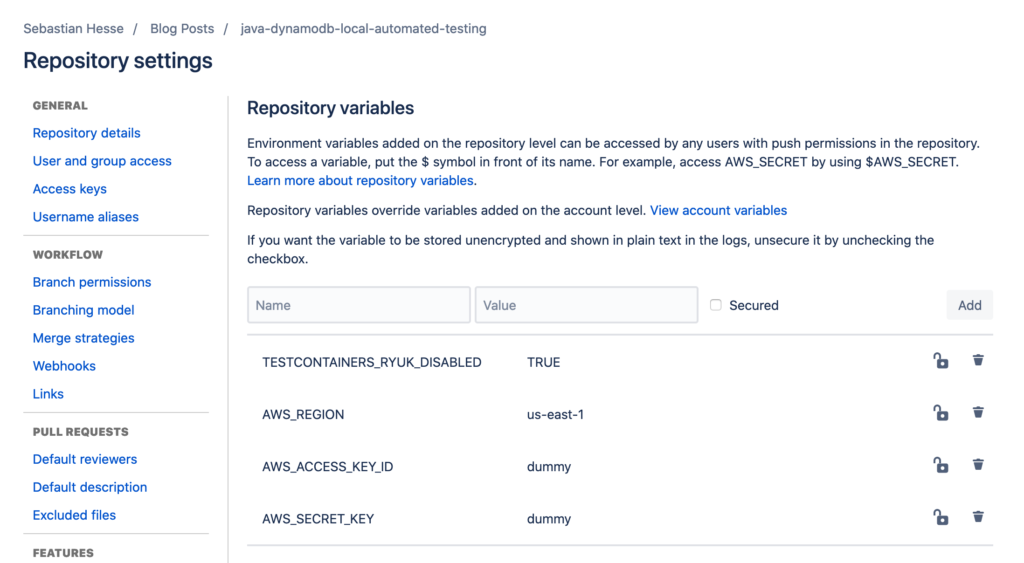

Disabling Ryuk

If you run this setup for the first time, you’ll likely encounter the following error message:

```
[testcontainers-ryuk] WARN org.testcontainers.utility.ResourceReaper - Can not connect to Ryuk at localhost:32768
java.net.ConnectException: Connection refused (Connection refused)
```

Ryuk is a helper container that Testcontainers starts to clean up containers after they’ve been used. Since Bitbucket Pipelines throws away the pipeline environment after each run anyway, you can disable this feature. To do so, just set TESTCONTAINERS_RYUK_DISABLED to TRUE in your Bitbucket Pipelines environment settings.

Proper Setup of the AWS SDK

After disabling Ryuk, run the Pipeline again. You’ll see another error:

```
com.amazonaws.SdkClientException: Unable to load AWS credentials from any provider in the chain: [EnvironmentVariableCredentialsProvider: Unable to load AWS credentials from environment variables (AWS_ACCESS_KEY_ID (or AWS_ACCESS_KEY) and AWS_SECRET_KEY (or AWS_SECRET_ACCESS_KEY)), SystemPropertiesCredentialsProvider: Unable to load AWS credentials from Java system properties (aws.accessKeyId and aws.secretKey), WebIdentityTokenCredentialsProvider: To use assume role profiles the aws-java-sdk-sts module must be on the class path., com.amazonaws.auth.profile.ProfileCredentialsProvider@3c443976: profile file cannot be null, com.amazonaws.auth.EC2ContainerCredentialsProviderWrapper@4ee33af7: Failed to connect to service endpoint: ]
    at de.sebastianhesse.examples.regular.DynamoDBLocalTest.createCreateDynamoDBTable(DynamoDBLocalTest.java:118)
    at de.sebastianhesse.examples.regular.DynamoDBLocalTest.connectionSuccessful(DynamoDBLocalTest.java:48)
```

This error tells you that the AWS SDK is not able to load any AWS credentials. You might wonder why they are necessary, since you’re connecting to a local DynamoDB 🤔 Well, even though you’re only opening a local connection and don’t need real credentials, the AWS SDK still tries to load credentials to sign its requests. Thus, you need to configure the AWS SDK properly. However, it’s enough to set the AWS_ACCESS_KEY_ID and AWS_SECRET_KEY environment variables to some dummy values and run the Pipeline again. Now, you’ll most likely see another error:

```
Unable to find a region via the region provider chain. Must provide an explicit region in the builder or setup environment to supply a region.
```

This problem is similar to the previous one. Even though you can use any region for connecting to a DynamoDB Local instance, you have to tell the AWS SDK a default region. Simply add another environment variable AWS_DEFAULT_REGION and set it to us-east-1. In the end, you should have configured the following environment variables:

- TESTCONTAINERS_RYUK_DISABLED: TRUE

- AWS_ACCESS_KEY_ID: any dummy value

- AWS_SECRET_KEY: any dummy value

- AWS_DEFAULT_REGION: us-east-1
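If one of these variables is missing, the tests fail with fairly indirect error messages like the ones above. As a minimal sketch, assuming you would rather fail fast, a hypothetical helper class `PipelineEnvCheck` (not part of the original repository) could report which of the four variables used in this post are missing or blank. It only checks these exact names; the AWS SDK also accepts alternatives such as AWS_SECRET_ACCESS_KEY, as the credentials error message shows.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical preflight check for the Bitbucket Pipelines variables described in this post. */
final class PipelineEnvCheck {

    // The variable names configured in this post (values are dummies or us-east-1 there)
    static final List<String> REQUIRED = List.of(
            "TESTCONTAINERS_RYUK_DISABLED",
            "AWS_ACCESS_KEY_ID",
            "AWS_SECRET_KEY",
            "AWS_DEFAULT_REGION");

    private PipelineEnvCheck() {
    }

    /** Returns the names from REQUIRED that are absent or blank, in the order of REQUIRED. */
    static List<String> missingVariables(Map<String, String> env) {
        List<String> missing = new ArrayList<>();
        for (String name : REQUIRED) {
            String value = env.get(name);
            if (value == null || value.isBlank()) {
                missing.add(name);
            }
        }
        return missing;
    }

    public static void main(String[] args) {
        // Checks the real process environment, e.g. inside a Bitbucket Pipelines step
        List<String> missing = missingVariables(System.getenv());
        System.out.println(missing.isEmpty() ? "All required variables are set" : "Missing variables: " + missing);
    }
}
```

You could, for example, call `missingVariables(System.getenv())` in a JUnit `@BeforeClass` method and fail with the returned names if the list isn’t empty.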

Summary

With this setup, you’re prepared for extending your automated tests using Java, JUnit, DynamoDB Local, Testcontainers and Bitbucket Pipelines 🎉 I’m looking forward to your feedback about this topic! How do you run your automated tests? Have you ever used Bitbucket Pipelines for this?

You can also use this setup when working with serverless functions that load and save data from/to DynamoDB. A great example is the GitHub repository aws-sam-java-rest, which contains many examples of serverless functions. If you struggle with writing serverless functions, read my blog posts about Going Serverless. In this context, it’s also important not to load the data from the database on every invocation. The article Caching in AWS Lambda presents best practices for properly caching data in serverless functions.